Creating your own cosmological likelihood class

Creating new internal likelihoods or external likelihood classes should be straightforward. For simple cases you can also just define a likelihood function (see Creating your own cosmological likelihood).

Likelihoods should inherit from the base likelihood.Likelihood class, or one of the existing extensions.

Note that likelihood.Likelihood inherits directly from theory.Theory, so likelihood and

theory components have a common structure, with likelihoods adding specific functions to return the likelihood.

A minimal framework would look like this

from cobaya.likelihood import Likelihood

import numpy as np

import os

class MyLikelihood(Likelihood):

def initialize(self):

"""

Prepare any computation, importing any necessary code, files, etc.

e.g. here we load some data file, with default cl_file set in .yaml below,

or overridden when running Cobaya.

"""

self.data = np.loadtxt(self.cl_file)

def get_requirements(self):

"""

return dictionary specifying quantities calculated by a theory code are needed

e.g. here we need C_L^{tt} to lmax=2500 and the H0 value

"""

return {'Cl': {'tt': 2500}, 'H0': None}

def logp(self, **params_values):

"""

Taking a dictionary of (sampled) nuisance parameter values params_values

and return a log-likelihood.

e.g. here we calculate chi^2 using cls['tt'], H0_theory, my_foreground_amp

"""

H0_theory = self.provider.get_param("H0")

cls = self.provider.get_Cl(ell_factor=True)

my_foreground_amp = params_values['my_foreground_amp']

chi2 = ...

return -chi2 / 2

You can also implement an optional close method doing whatever needs to be done at the end of the sampling (e.g. releasing memory).

The default settings for your likelihood are specified in a MyLikelihood.yaml file in the same folder as the class module, for example

cl_file: /path/do/data_file

# Aliases for automatic covariance matrix

aliases: [myOld]

# Speed in evaluations/second (after theory inputs calculated).

speed: 500

params:

my_foreground_amp:

prior:

dist: uniform

min: 0

max: 100

ref:

dist: norm

loc: 153

scale: 27

proposal: 27

latex: A^{f}_{\rm{mine}}

When running Cobaya, you reference your likelihood in the form module_name.ClassName. For example,

if your MyLikelihood class is in a module called mylikes your input .yaml would be

likelihood:

mylikes.MyLikelihood:

# .. any parameters you want to override

If your class name matches the module name, you can also just use the module name.

Note that if you have several nuisance parameters, fast-slow samplers will benefit from making your likelihood faster even if it is already fast compared to the theory calculation. If it is more than a few milliseconds consider recoding more carefully or using numba where needed.

Many real-world examples are available in cobaya.likelihoods, which you may be able to adapt as needed for more complex cases, and a number of base class are pre-defined that you may find useful to inherit from instead of Likelihood directly.

There is no fundamental difference between internal likelihood classes (in the Cobaya likelihoods package) or those

distributed externally. However, if you are distributing externally you may also wish to provide a way to

calculate the likelihood from pre-computed theory inputs as well as via Cobaya. This is easily done by extracting

the theory results in logp and them passing them and the nuisance parameters to a separate function,

e.g. log_likelihood where the calculation is actually done. For example, adapting the example above to:

class MyLikelihood(Likelihood):

...

def logp(self, **params_values):

H0_theory = self.provider.get_param("H0")

cls = self.provider.get_Cl(ell_factor=True)

return self.log_likelihood(cls, H0, **params_values)

def log_likelihood(self, cls, H0, **data_params)

my_foreground_amp = data_params['my_foreground_amp']

chi2 = ...

return -chi2 / 2

You can then create an instance of your class and call log_likelihood, entirely independently of

Cobaya. However, in this case you have to provide the full theory results to the function, rather than using the self.provider to get them

for the current parameters (self.provider is only available in Cobaya once a full model has been instantiated).

If you want to call your likelihood for specific parameters (rather than the corresponding computed theory results), you need to call get_model() to instantiate a full model specifying which components calculate the required theory inputs. For example,

packages_path = '/path/to/your/packages'

info = {

'params': fiducial_params,

'likelihood': {'my_likelihood': MyLikelihood},

'theory': {'camb': None},

'packages': packages_path}

from cobaya.model import get_model

model = get_model(info)

model.logposterior({'H0':71.1, 'my_param': 1.40, ...})

Input parameters can be specified in the likelihood’s .yaml file as shown above.

Alternatively, they can be specified as class attributes. For example, this would

be equivalent to the .yaml-based example above

class MyLikelihood(Likelihood):

cl_file = "/path/do/data_file"

# Aliases for automatic covariance matrix

aliases = ["myOld"]

# Speed in evaluations/second (after theory inputs calculated).

speed = 500

params = {"my_foreground_amp":

{"prior": {"dist": "uniform", "min": 0, "max": 0},

"ref" {"dist": "norm", "loc": 153, "scale": 27},

"proposal": 27,

"latex": r"A^{f}_{\rm{mine}"}}

If your likelihood has class attributes that are not possible input parameters, they should be made private by starting the name with an underscore.

Any class can have class attributes or a .yaml file, but not both. Class

attributes or .yaml files are inherited, with re-definitions override the inherited value.

Likelihoods, like Theory classes, can also provide derived parameters. To do this use the special

_derived dictionary that is passed in as an argument to logp. e.g.

class MyLikelihood(Likelihood):

params = {'D': None, 'Dx10': {'derived': True}}

def logp(self, _derived=None, **params_values):

if _derived is not None:

_derived["Dx10"] = params_values['D'] * 10

...

Alternatively, you could implement the Theory-inherited calculate method,

and set both state['logp'] and state['derived'].

If you distribute your package on pypi or github, any user will have to install your package before using it. This can be done automatically from an input yaml file (using cobaya-install) if the user specifies a package_install block. For example, the Planck NPIPE cosmology likelihoods can be used from a yaml block

likelihood:

planck_2018_lowl.TT_native: null

planck_2018_lowl.EE_native: null

planck_NPIPE_highl_CamSpec.TTTEEE: null

planckpr4lensing:

package_install:

github_repository: carronj/planck_PR4_lensing

min_version: 1.0.2

Running cobaya-install on this input will download planckpr4lensing from

github and then pip install it (since it is not an internal package provided with Cobaya, unlike the other likelihoods). The package_install block can instead use download_url for a zip download, or just “pip” to install from pypi. The options are the same as the install_options for installing likelihood data using the InstallableLikelihood class described below.

Automatically showing bibliography

You can store bibliography information together with your class, so that it is shown when

using the cobaya-bib script, as explained in this section. To do

that, you can simply include the corresponding bibtex code in a file named as your class

with .bibtex extension placed in the same folder as the module defining the class.

As an alternative, you can implement a get_bibtex class method in your class that

returns the bibtex code:

@classmethod

def get_bibtex(cls):

from inspect import cleandoc # to remove indentation

return cleandoc(r"""

# Your bibtex code here! e.g.:

@article{Torrado:2020dgo,

author = "Torrado, Jesus and Lewis, Antony",

title = "{Cobaya: Code for Bayesian Analysis of hierarchical physical models}",

eprint = "2005.05290",

archivePrefix = "arXiv",

primaryClass = "astro-ph.IM",

reportNumber = "TTK-20-15",

doi = "10.1088/1475-7516/2021/05/057",

journal = "JCAP",

volume = "05",

pages = "057",

year = "2021"

}""")

InstallableLikelihood

This supports the default data auto-installation. Just add a class-level string specifying installation options, e.g.

from cobaya.likelihoods.base_classes import InstallableLikelihood

class MyLikelihood(InstallableLikelihood):

install_options = {"github_repository": "MyGithub/my_repository",

"github_release": "master"}

...

You can also use install_options = {"download_url":"..url.."}

DataSetLikelihood

This inherits from InstallableLikelihood and wraps loading settings from a .ini-format .dataset file giving setting related to the likelihood (specified as dataset_file in the input .yaml).

from cobaya.likelihoods.base_classes import DataSetLikelihood

class MyLikelihood(DataSetLikelihood):

def init_params(self, ini):

"""

Load any settings from the .dataset file (ini).

e.g. here load from "cl_file=..." specified in the dataset file

"""

self.cl_data = np.load_txt(ini.string('cl_file'))

...

CMBlikes

This the CMBlikes self-describing text .dataset format likelihood inherited from DataSetLikelihood (as used by the Bicep and Planck lensing likelihoods). This already implements the calculation of Gaussian and Hammimeche-Lewis likelihoods from binned \(C_\ell\) data, so in simple cases you don’t need to override anything, you just supply the .yaml and .dataset file (and corresponding references data and covariance files). Extensions and optimizations are welcome as pull requests.

from cobaya.likelihoods.base_classes import CMBlikes

class MyLikelihood(CMBlikes):

install_options = {"github_repository": "CobayaSampler/planck_supp_data_and_covmats"}

pass

For example native (which is installed as an internal likelihood) has this .yaml file

# Path to the data: where the planck_supp_data_and_covmats has been cloned

path: null

dataset_file: lensing/2018/smicadx12_Dec5_ftl_mv2_ndclpp_p_teb_consext8.dataset

# Overriding of .dataset parameters

dataset_params:

# Overriding of the maximum ell computed

l_max:

# Aliases for automatic covariance matrix

aliases: [lensing]

# Speed in evaluations/second

speed: 50

params: !defaults [../planck_2018_highl_plik/params_calib]

The description of the data files and default settings are in the dataset file.

The bicep_keck_2018 likelihood provides a more complicated model that adds methods to implement the foreground model.

This example also demonstrates how to share nuisance parameter settings between likelihoods: in this example all the

Planck likelihoods depend on the calibration parameter, where here the default settings for that are loaded from the

.yaml file under planck_2018_highl_plik.

Real-world examples

The simplest example are the H0 likelihoods, which are just implemented as simple Gaussians.

For an examples of more complex real-world CMB likelihoods, see bicep_keck_2018 and the lensing likelihood shown above (both using CMBlikes format), or Planck2018CamSpecPython for a full Python implementation of the

multi-frequency Planck likelihood (based from DataSetLikelihood). The PlanckPlikLite

likelihood implements the plik-lite foreground-marginalized likelihood. Both the plik-like and CamSpec likelihoods

support doing general multipole and spectrum cuts on the fly by setting override dataset parameters in the input .yaml.

The provided BAO likelihoods base from BAO, reading from simple text files.

The DES likelihood (based from DataSetLikelihood) implements the DES Y1 likelihood, using the

matter power spectra to calculate shear, count and cross-correlation angular power spectra internally.

The example external CMB likelihood is a complete example of how to make a new likelihood class in an external Python package.

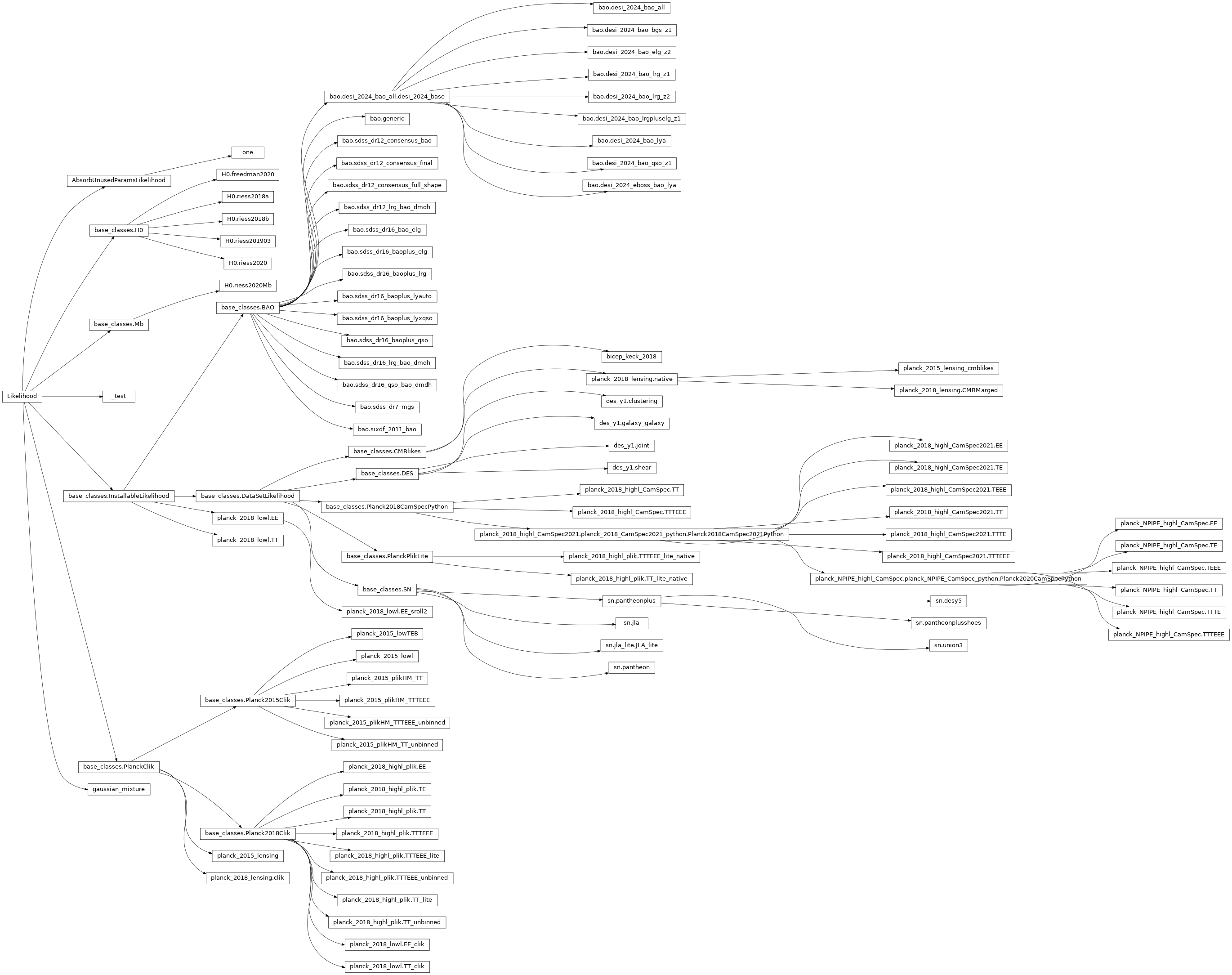

Inheritance diagram for internal cosmology likelihoods